In the coming years, deepfakes will become one of the primary tools in the hands of cybercriminals. The technique of merging images, video, and voice is often used for entertainment, but it can also be a dangerous instrument for disinformation and for attacks aimed at extorting data or money. Countering such threats has become a research topic for many scientists. Researchers from Washington University in St. Louis have developed AntiFake, a defense mechanism that prevents unauthorized speech synthesis by making it difficult for artificial intelligence to extract the key characteristics of a person's voice. Demand for such tools will grow as the generative AI market develops.

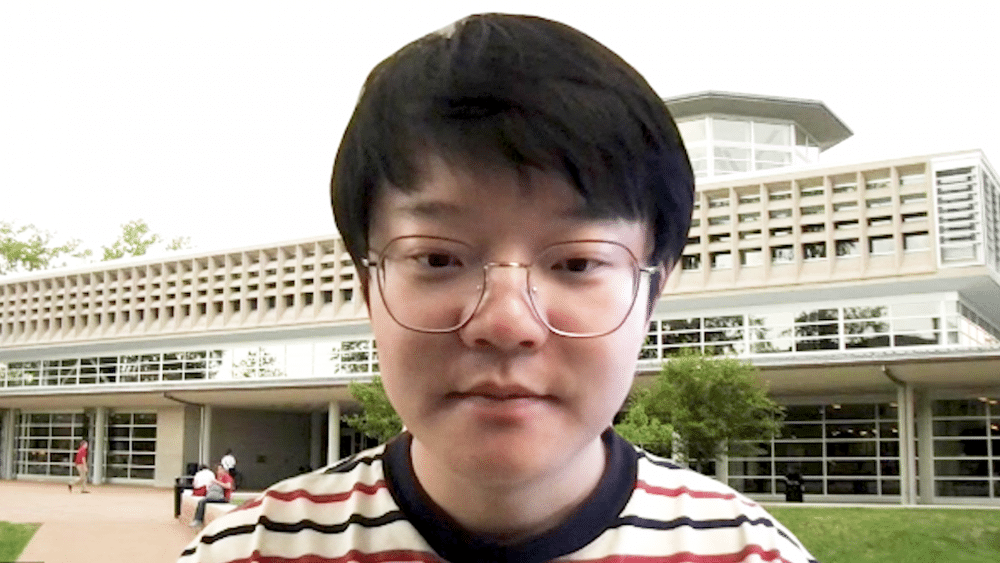

“The term ‘deepfake’ consists of two parts. The first, ‘deep’, refers to deep neural networks, one of the most advanced areas of machine learning. ‘Fake’ means creating a false image of a face or voice. In our context, this means that a cybercriminal can use deep neural networks to counterfeit a particular person's speech,” says Zhiyuan Yu, a doctoral student in the Department of Computer Science and Engineering at Washington University in St. Louis, in an interview for Newseria Innovations. “Deepfakes can be used in malicious attacks in many ways. One is spreading false information and hate speech, including in the voices of well-known people. For example, a criminal used a synthesized version of President Biden’s voice to declare war on Russia. We’ve also seen cases where a cybercriminal used a user’s voice to pass identity verification at a bank. Such incidents have become part of our lives.”

WithSecure experts expect deepfake-driven disinformation to surge in 2024, mainly because it is both a presidential election year in the USA and the year of the European Parliament elections.

“In the face of deepfake threats, we propose active countermeasures with the AntiFake technology. The solutions created so far are based on threat detection. They use speech signal analysis or physics-based detection methods to determine whether a given sample is an authentic statement by a person or was generated by artificial intelligence. Our AntiFake technology, however, is based on a completely different, proactive method,” says Zhiyuan Yu.

The creators' method adds small perturbations to speech samples. If a cybercriminal then uses the protected samples for speech synthesis, the generated speech will sound completely different from the user. As a result, it cannot be used to deceive the user's family or to bypass voice identity verification.
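Voice identity verification is commonly modelled as comparing a speaker embedding of the incoming voice with the embedding of the enrolled user, for example by cosine similarity, and accepting the caller above some threshold. The toy sketch below illustrates why a clone built from a protected sample fails such a check. It assumes cosine similarity and a hypothetical threshold of 0.75, and the three-dimensional embeddings are made-up numbers, not outputs of any real encoder or of AntiFake.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical acceptance threshold; real verifiers tune this value.
THRESHOLD = 0.75

def verify(enrolled, candidate, threshold=THRESHOLD):
    """Accept the candidate voice if its embedding is close to the enrolled one."""
    return cosine_similarity(enrolled, candidate) >= threshold

# Made-up embeddings, for illustration only.
enrolled = [0.9, 0.1, 0.4]               # the genuine user's voiceprint
clone_unprotected = [0.85, 0.15, 0.42]   # clone synthesized from an unprotected sample
clone_protected = [0.1, 0.9, -0.3]       # clone synthesized from a perturbed sample

print(verify(enrolled, clone_unprotected))  # True: the attack would succeed
print(verify(enrolled, clone_protected))    # False: the clone is rejected
```

The protection goal in this framing is simply to push the clone's embedding far enough from the user's that it falls below the verifier's threshold.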

“Few people have access to deepfake detectors. Imagine that we receive an email containing a voice sample, or come across such a sample on a social media platform. Few people will then search the internet for a detector to check whether the sample is authentic. In our opinion, prevention matters most. If criminals fail to generate a voice sample that sounds like a particular person, the attack will not happen at all,” emphasizes the researcher.

From a technological standpoint, the solution works by adding perturbations to audio samples. In designing the system, the creators had to tackle several challenges. The first was deciding what kind of perturbations to apply so that they would be inaudible to the human ear yet still effective.

“It’s true that the more perturbation we add, the better the protection, but if the sample consisted of nothing but perturbation, the user could not use it at all,” says Zhiyuan Yu.
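The trade-off Zhiyuan Yu describes can be made concrete with a simple proxy for audibility: the signal-to-noise ratio (SNR) between the original audio and the added perturbation. The sketch below is not AntiFake's actual quality constraint. It assumes a synthetic 440 Hz tone standing in for speech, a 16 kHz sample rate, and a pseudo-random perturbation bounded by a hypothetical budget `eps`, and it shows that raising `eps` lowers the SNR, meaning the change becomes easier to hear.

```python
import math
import random

SAMPLE_RATE = 16_000   # samples per second (assumed)
N = SAMPLE_RATE        # one second of audio

# A 440 Hz tone with amplitude 0.5 stands in for a speech recording.
signal = [0.5 * math.sin(2 * math.pi * 440 * t / SAMPLE_RATE) for t in range(N)]

def bounded_perturbation(n, eps, seed=0):
    """Pseudo-random perturbation with every sample in [-eps, eps)."""
    rng = random.Random(seed)
    return [eps * rng.uniform(-1.0, 1.0) for _ in range(n)]

def snr_db(signal, perturbation):
    """Signal-to-noise ratio in decibels; higher means a less audible change."""
    p_signal = sum(s * s for s in signal) / len(signal)
    p_noise = sum(d * d for d in perturbation) / len(perturbation)
    return 10 * math.log10(p_signal / p_noise)

for eps in (0.001, 0.01, 0.1, 0.5):
    delta = bounded_perturbation(N, eps)
    print(f"eps={eps}: SNR = {snr_db(signal, delta):.1f} dB")
```

Because the same noise pattern is only rescaled, doubling `eps` quadruples the noise power and costs about 6 dB of SNR. A real system has to pick the largest perturbation that still keeps the recording usable, which is the balance described in the quote.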

It was also important to create a universal solution that would work with various types of speech synthesizers.

“We applied an ensemble learning approach. We collected various synthesizer models and determined that the speech encoder is a crucial element of a synthesizer. It converts speech samples into vectors, or embeddings, that capture a person's speech characteristics, and it is the component that most strongly determines the speaker's identity in the generated speech. We wanted to alter these embeddings through the encoder, and we used four different encoders so that the protection would work across different voice samples,” explains the scientist from Washington University in St. Louis.
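A rough way to picture the ensemble idea is to search for one bounded perturbation that shifts the speaker embedding of several encoders at once. In the toy sketch below, four hypothetical linear "encoders" (random matrices) stand in for real neural speaker encoders. The perturbation is found by projected sign-gradient ascent, a PGD-style method that maximizes the total squared embedding shift across all encoders under an L-infinity budget `eps`. The objective, the encoder shapes, `eps`, `alpha`, and the step count are all illustrative assumptions, not AntiFake's published loss or code; real encoders are nonlinear, and a practical tool must also keep the perturbation inaudible.

```python
import random

def make_encoder(dim_in, dim_out, seed):
    """Hypothetical linear 'speaker encoder': a random dim_out x dim_in matrix."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim_in)] for _ in range(dim_out)]

def matvec(W, v):
    """W @ v for a list-of-rows matrix."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def matvec_t(W, u):
    """W^T @ u, i.e. backpropagating u through the linear encoder."""
    return [sum(W[i][j] * u[i] for i in range(len(W))) for j in range(len(W[0]))]

def ensemble_objective(encoders, delta):
    """Total squared embedding shift, summed over all encoders.

    For a linear encoder, enc(x + delta) - enc(x) = W @ delta,
    so the clean sample x drops out of the objective.
    """
    return sum(sum(e * e for e in matvec(W, delta)) for W in encoders)

def ensemble_gradient(encoders, delta):
    """Gradient of ensemble_objective: sum over encoders of 2 * W^T W delta."""
    grad = [0.0] * len(delta)
    for W in encoders:
        g = matvec_t(W, matvec(W, delta))
        grad = [acc + 2.0 * gj for acc, gj in zip(grad, g)]
    return grad

def init_delta(dim, eps, seed=0):
    """Small random starting point well inside the budget."""
    rng = random.Random(seed)
    return [rng.uniform(-eps / 10, eps / 10) for _ in range(dim)]

def sign(x):
    return (x > 0) - (x < 0)

def ensemble_pgd(encoders, dim, eps, alpha, steps, seed=0):
    """Projected sign-gradient ascent under an L-infinity budget eps."""
    delta = init_delta(dim, eps, seed)
    for _ in range(steps):
        grad = ensemble_gradient(encoders, delta)
        # Move every sample in the direction that increases the shift,
        # then clip back into [-eps, eps] (the "projection" step).
        delta = [min(eps, max(-eps, d + alpha * sign(g))) for d, g in zip(delta, grad)]
    return delta

# Four hypothetical encoders, echoing the four mentioned in the interview.
encoders = [make_encoder(16, 8, seed=s) for s in range(4)]
eps = 0.05
delta = ensemble_pgd(encoders, dim=16, eps=eps, alpha=0.01, steps=20)
print("start :", ensemble_objective(encoders, init_delta(16, eps)))
print("result:", ensemble_objective(encoders, delta))
```

Optimizing against several encoders at once is what, in this framing, makes the perturbation less tied to any single synthesizer: a change that only fooled one encoder would score poorly on the summed objective.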

The source code of the technology developed in the Department of Computer Science and Engineering at Washington University in St. Louis is publicly available. In tests on the most popular synthesizers, AntiFake was 95% effective. The tool was also tested with 24 participants to confirm that it is usable by different populations. As the expert explains, the main limitation of the solution is that cybercriminals may try to circumvent the protection by removing the perturbations from the sample. There is also a risk that synthesizers built somewhat differently will appear, against which the technology in its current form will no longer be effective. According to experts, work to eliminate the risk of deepfake attacks will continue. AntiFake currently protects short recordings, but nothing prevents it from being extended to longer recordings and even music.

“We are convinced that many other developments aimed at preventing such problems will soon emerge. Our group is currently working on protection against the unauthorized generation of songs and other musical pieces, because producers and people working in the music industry have been asking us whether AntiFake can be used for their needs. We are therefore constantly working on extending protection to other fields,” emphasizes Zhiyuan Yu.

Future Market Insights estimated that the generative artificial intelligence market would be worth $10.9 billion in 2023. Over the next 10 years, it is expected to grow at a compound annual rate of 31.3%, with revenue exceeding $167.4 billion by 2033. The authors of the report point out, however, that AI-generated content can be used maliciously, for example in fake videos, disinformation, or intellectual property violations.
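The forecast rests on compound growth: a starting value multiplied by (1 + rate) once per year. The short check below recomputes the quoted figures. Compounding $10.9 billion at 31.3% for 10 years gives about $166 billion, and the rate implied by going from $10.9 billion to $167.4 billion is roughly 31.4%. The small gap most likely comes from rounding in the published figures, though that is an assumption, since the report's underlying numbers were not checked here.

```python
def project(value, annual_rate, years):
    """Compound a starting value at a constant annual growth rate."""
    return value * (1 + annual_rate) ** years

def implied_cagr(start, end, years):
    """Constant annual growth rate that turns start into end over the given years."""
    return (end / start) ** (1 / years) - 1

# Figures quoted from the Future Market Insights estimate (USD billions).
projected_2033 = project(10.9, 0.313, 10)
rate_from_endpoints = implied_cagr(10.9, 167.4, 10)
print(f"10.9 compounded at 31.3%/yr for 10 years: {projected_2033:.1f}")
print(f"rate implied by 10.9 -> 167.4 over 10 years: {rate_from_endpoints:.2%}")
```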