The upcoming elections in over 50 countries, including the USA and Poland, are likely to encourage creators of harmful content to step up their activities using artificial intelligence. Most deepfake content will probably emerge later this year. Researchers have examined which areas of the digital world incubate the activities of “bad actors” and have mapped them. The map shows that small platforms are the main places where harmful content originates and spreads. In this context, the EU’s Digital Services Act could be considered a misstep, as these small platforms will remain practically beyond the regulations’ control. Scientists suggest fighting this phenomenon with actions based on realistic scenarios, and eliminating misinformation entirely is not one of them. It is better to limit the effects of disinformation.

Experts warn about what unchecked artificial intelligence in social media could achieve. “Considering the threats posed by ‘bad actors’ and the elections taking place this year in many countries, including Poland, the UK, the USA, and India, where a total of 3 billion people will vote, we face the problem of fake news and disinformation on the internet. Many things can go wrong given the capabilities of artificial intelligence: it operates without restriction 24/7, and it can create new things in a human-like manner,” says Prof. Neil Johnson of George Washington University, who leads the Dynamic Online Networks Laboratory, which specializes in mapping the internet.

The EU has already recognized the threats posed by the uncontrolled use of artificial intelligence. One instrument designed to address this phenomenon is the Digital Services Act (DSA), which became fully applicable on February 17th. These rules, dubbed the ‘internet constitution,’ set out EU-wide requirements for digital services that act as intermediaries connecting consumers with goods, services, and content. The DSA is intended to build a safer, fairer online world. For the largest internet platforms, the rules have already applied since last August.

The new regulations impose many obligations on various types of platforms, including obligations related to their role in enabling users to spread false, illegal, and harmful content or to commit e-commerce fraud. In recent years, platforms have become the most important venues for the exchange of information and opinions, used by hundreds of millions, or even billions, of users worldwide.

The problem, however, is that there are no clear criteria indicating when we are dealing with harmful content.

“All the discussions about policy directions, often led by the platforms themselves, which only have knowledge of their own platforms, rely on ambiguous criteria referring to ‘bad’ entities on the web,” observes Prof. Neil Johnson. “Our study aimed to identify where these threats hide, where harmful parties operate, and where their communities are. Imagine we want to solve traffic problems in London, New York, or Warsaw: how can we do it without a map of the city?”

The researchers decided to conduct a quantitative scientific analysis to predict how these entities will exploit artificial intelligence globally.

“This is the first study in which we looked at the numbers and locations of AI activity on a large scale. If you watch any political debate in the USA, Europe, or the UK about the internet and AI, the discussions are vague, and it seems the politicians don’t fully understand what is happening. I don’t blame them for it: I have spent my entire life trying to understand this issue, and they have only two hours to familiarize themselves with the topic. The problem, however, is that they are the decision-makers,” says the physicist from George Washington University. “AI combined with the internet will change everything because it’s a new technology available to everyone worldwide. That is why a first study on this topic matters. We were surprised that no one else had tackled this issue before.”

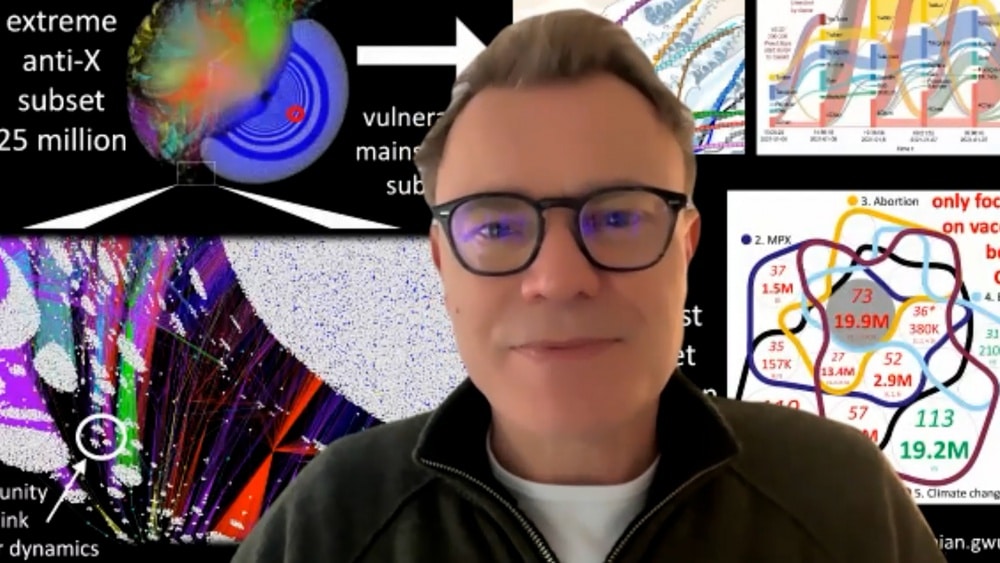

The researchers first identified several entities that could be classified as “bad actors.” They then traced these entities’ connections, audiences, and “friends,” and used this information to build a map of them.

“We expected that, by analogy with a city, all the good people would live downtown, with a large population in the center, and all the suspicious activity would happen on the outskirts. However, our results contradicted this assumption. Besides large platforms such as Facebook, X (formerly Twitter), or TikTok, which host vast numbers of people in communities centered on topics like parenting or food, there are usually communities on smaller platforms that are linked to these large ones. Together, they form an ecosystem in which AI serving harmful entities can run free,” explains the head of the Dynamic Online Networks Laboratory. “Two to three billion people worldwide belong to these communities, such as pizza lovers or parents.”

Although the largest platforms matter most in terms of potential damage, they are not necessarily where such AI-driven activity originates or where “bad actor” communities operate, spreading racism and hatred and targeting women, governments, or followers of specific religions. The researchers found that these communities act as a reservoir of harmful content on smaller platforms, such as Telegram or Gab, which fall outside the DSA’s strictest rules for the largest platforms. In the researchers’ view, this is a misguided approach, and one that the USA might also replicate.

“As there was no map available when the regulations were being developed, it was assumed that larger platforms have a bigger impact. This was simply an assumption, and, as in any other area of science, assumptions cannot be accepted without verification. Our study aimed to test these assumptions, and the conclusions contradicted them,” emphasizes Prof. Neil Johnson. “Everyone focuses on what false content can be distributed, but what matters most is where it is located. We’ve determined where the threats hide. By the same principle, knowing where the vehicles are in a city is more important for understanding traffic than knowing there are two BMWs and a Toyota.”

The expert points out that two strategies dominate the disinformation debate: eliminating the problem entirely or controlling it. In his view, the first is doomed to failure from the start. It would require detailed, costly measures on a vast scale, with no guarantee that they would work.

“Let’s move on to the second strategy, which is controlling the problem. It’s a more controversial strategy, as most people would prefer to eliminate these problems rather than control them. But look at COVID-19, for example: we didn’t eliminate it entirely, but we can say we’ve brought it under control. The crux of the matter is that governments, organizations, and platforms tend to avoid this strategy. The issue has become politicized, but as a scientist, I can say based on the study’s results that in the political arena, the argument that fake information should be eliminated will always sound nobler and will always seem like the better way out. The problem is that without a map, eliminating it is impossible. As far as I know, we’re the only ones who have such a map, built on an understanding of how the problem works in real life,” stresses the head of the Dynamic Online Networks Laboratory.

According to estimates made in the study, AI-fueled “bad actor” activity will become a daily occurrence by this summer. The researchers based this prediction on comparable technology-driven incidents in 2008 and 2013: the first involved algorithmic attacks on American financial markets, and the second involved Chinese cyber-attacks on US infrastructure. The forecast is particularly ominous in the context of the upcoming elections.

“These are going to be really interesting elections. Previously, we had to deal with a few bots on Twitter and a couple of odd websites, and their real impact on the US presidential elections in 2016 and 2020 remains unknown. Now, with artificial intelligence, the situation looks completely different: it can be used on a larger scale and at higher speed. Most of the US population is online, and those who don’t have social media accounts have siblings, parents, or children who do, so they are influenced indirectly. This influence reaches the entire population, unless someone lives in a cabin deep in Montana. We’re dealing with a new technology and, without judging who is on the right side here, those who make better use of it will win,” warns Prof. Neil Johnson. “In the countries holding elections this year, we will be receiving deepfake voice messages. It will be a real online battle and an arms race over who makes more use of this technology. Should such tools be used? Of course not, but that doesn’t change the fact that they will be.”