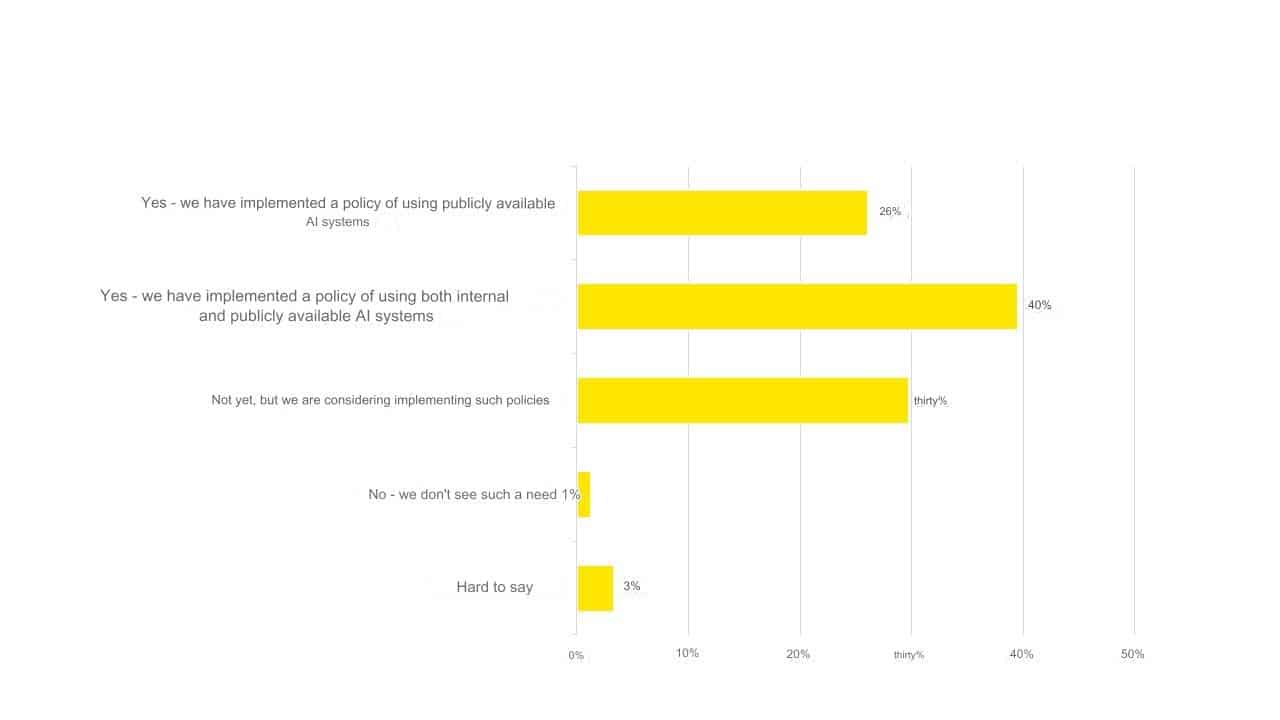

Artificial intelligence (AI) can be used both to carry out cyberattacks and to defend against them. According to an EY study, “How Polish Companies Implement AI”, nearly all respondents (92%) take cybersecurity into account when implementing AI tools, although 37% of all respondents do so only to a limited extent. Companies' growing awareness of AI-related risks is shown by the fact that 66% of those surveyed have implemented procedures governing its use, at least for generally available systems. Another 30% are considering introducing such rules. Notably, domestic companies declare that they manage this risk appropriately: only 3% did not consider the threats posed by artificial intelligence at all.

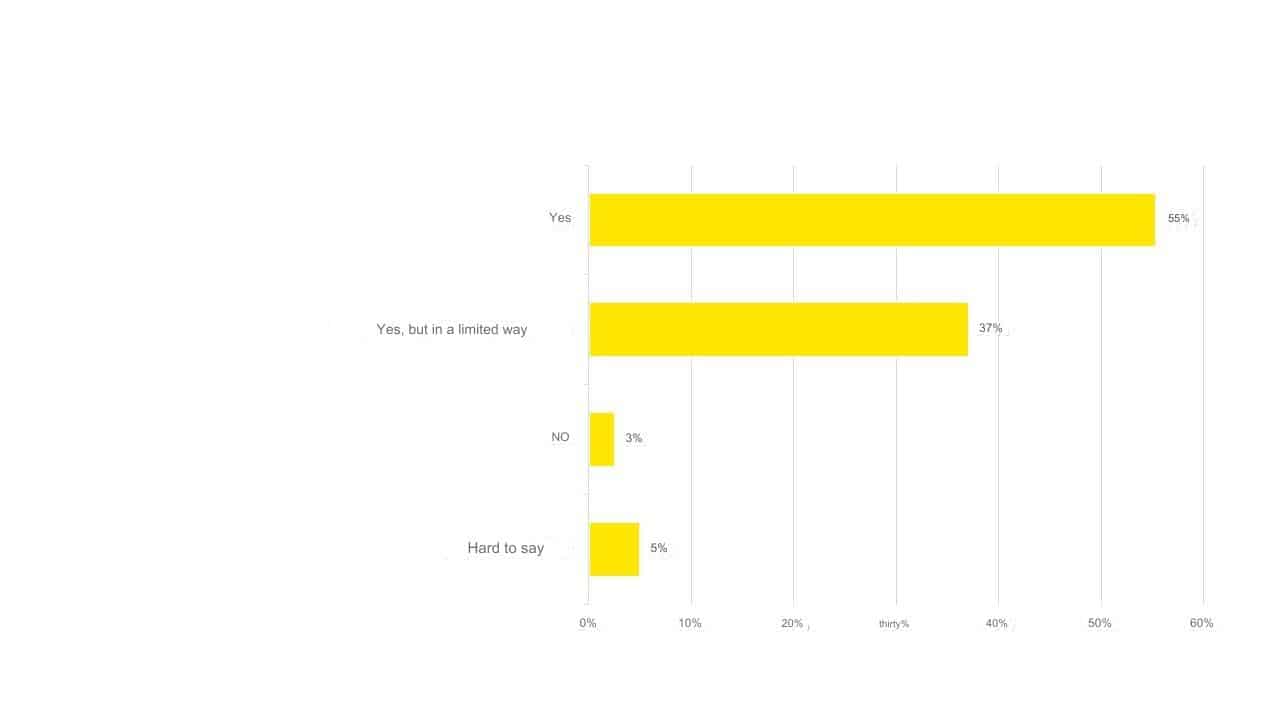

AI-based solutions are becoming the norm in many companies and offer an opportunity to significantly increase efficiency. However, like any technology, AI brings threats as well as opportunities, including cyber risks. The results of the EY study “How Polish Companies Implement AI” show that domestic entrepreneurs treat cybersecurity as a significant issue when implementing AI tools. Over half of them (55%) take this aspect into account, and another 37% consider it to a limited extent. Only 3% of respondents ignore the topic completely.

“As the applications of artificial intelligence multiply, new threats emerge, and networks and information systems must be protected from attacks. Companies in Poland declare that they analyze potential risks, but in practice the situation is often less optimistic. AI is seen mainly as a technological novelty and associated with solutions such as chatbots. However, it is an entirely new area of risk, and many companies are not prepared for the related cyber threats. These include attacks using personalized phishing messages and fraud attempts using voice recordings strikingly similar to the voices of well-known individuals. AI also makes it easier for hackers to write code that finds loopholes in software. The increasingly unrestricted and risky use of AI tools is leading to a growing problem with leaks of sensitive data,” explains Piotr Ciepiela, Partner at EY and Leader of the Cybersecurity Team in the EMEIA region.

Graph 1. Were aspects of cybersecurity analyzed when implementing AI-based tools?

New Policies on AI Tools

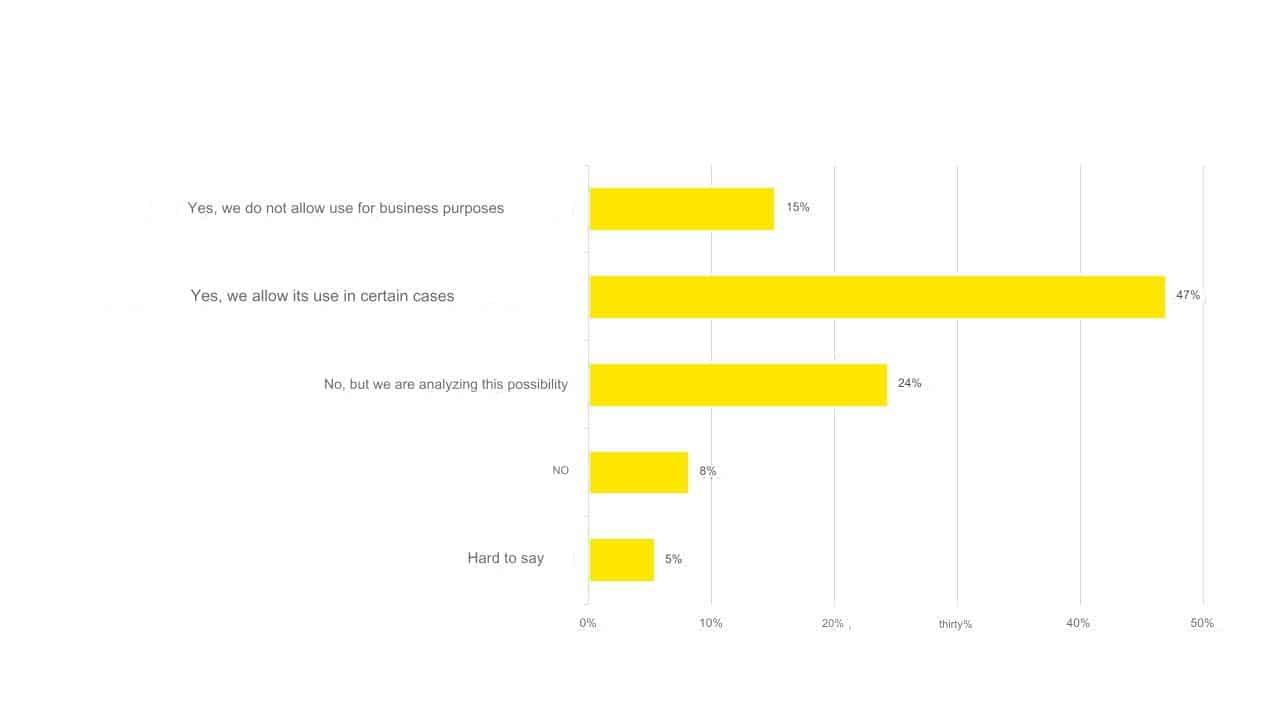

Introducing appropriate procedures for the use of AI systems is an important cybersecurity measure. According to the EY study, the largest group of respondents (40%) have implemented a policy covering both internal and generally available AI tools, such as chatbots. Another 26% of companies regulate only the use of external systems, and 30% are still considering whether to adopt any rules.

Policies on the use of generally available AI solutions allow an organization to protect itself from the negative effects of these tools, such as leaks of restricted or sensitive data. An employee might use external tools for work duties without supervision and share confidential information or details of important projects with a chatbot. This could expose the company to unforeseen consequences. It is therefore important to use AI systems responsibly and in line with the company's ethical principles, which helps minimize potential risks.

Graph 2. Were detailed rules and procedures for using AI systems implemented?

Minimizing Risks

Companies in Poland take different approaches to managing the risks of implementing AI systems. Nearly one-third of respondents (31%) have identified the most important threats that AI implementation poses to their organization and are working to prevent them. Another group (26% of responses) conducts a risk analysis before implementing a specific system. A further 27% of respondents are aware of general, typical AI risks but do not focus on aspects specific to their own organization. Meanwhile, one in ten companies (10%) manages the risk of adopting AI tools the same way it would for any other IT system.

Employees are the weakest link in maintaining a high level of cybersecurity. Their insufficient knowledge or mistakes most often expose a company to cyberattacks. To counteract this, organizations implementing AI tools take precautions in advance. One of the most popular methods is introducing procedural measures (e.g., employee training), which 36% of respondents reported. Another option is adding more safeguards at the tool level (31% of responses). One in five companies (20%) has implemented both procedural and tool-based safeguards.

“Although AI tools are being implemented more and more widely across industries, for many organizations this means a big change because such projects are complex. It is essential to train all employees so that they know clear rules for using both internal and generally available artificial intelligence systems. It is also worth conducting a detailed risk analysis before actually implementing AI. This makes it possible to build an effective action strategy and identify areas that require special attention, such as personal data protection or legal issues,” advises Patryk Gęborys, Partner at EY Poland, Information Security and Technology Team.

Graph 3. Were rules on the use of generally available AI-based tools included in company policy?

About the Study

The study “How Polish Companies Implement AI” was conducted on behalf of EY Poland by Cube Research in August–September 2023. It surveyed 501 large and medium-sized enterprises operating in the manufacturing, service, and trade sectors.